HOME / POSTS / GENERALIZATION BOUNDED IMPLICIT LEARNING OF NEARLY DISCONTINUOUS FUNCTIONS

Generalization Bounded Implicit Learning of Nearly Discontinuous Functions

June 2022 Bianchini

Project Documents

This post goes along with the paper I published at the 4th annual Learning for Dynamics and Control (L4DC) in 2022. My co-authors are fellow Penn PhD student, Mathew Halm, as well as Penn faculty Michael Posa and Nikolai Matni.

Interested readers can refer to our paper for all of the details (on publisher page, or on arxiv including extended appendices). However this post should provide a high-level sense of the contributions.

Paper abstract

Inspired by recent strides in empirical efficacy of implicit learning in many robotics tasks, we seek to understand the theoretical benefits of implicit formulations in the face of nearly discontinuous functions, common characteristics for systems that make and break contact with the environment such as in legged locomotion and manipulation. We present and motivate three formulations for learning a function: one explicit and two implicit. We derive generalization bounds for each of these three approaches, exposing where explicit and implicit methods alike based on prediction error losses typically fail to produce tight bounds, in contrast to other implicit methods with violation-based loss definitions that can be fundamentally more robust to steep slopes. Furthermore, we demonstrate that this violation implicit loss can tightly bound graph distance, a quantity that often has physical roots and handles noise in inputs and outputs alike, instead of prediction losses which consider output noise only. Our insights into the generalizability and physical relevance of violation implicit formulations match evidence from prior works and are validated through a toy problem, inspired by rigid-contact models and referenced throughout our theoretical analysis.

Citation

@inproceedings{bianchini2022generalization,

title = {Generalization Bounded Implicit Learning of Nearly Discontinuous Functions},

author = {Bianchini, Bibit and Halm, Mathew and Matni, Nikolai and Posa, Michael},

booktitle = {Proceedings of The 4th Annual Learning for Dynamics and Control Conference (L4DC)},

pages = {1112--1124},

year = {2022},

editor = {Firoozi, Roya and Mehr, Negar and Yel, Esen and Antonova, Rika and Bohg, Jeannette and Schwager, Mac and Kochenderfer, Mykel},

volume = {168},

series = {Proceedings of Machine Learning Research},

month = {23--24 Jun},

publisher = {PMLR},

url = {https://proceedings.mlr.press/v168/bianchini22a.html},

arxiv = {2112.06881}

}

Motivation

I joined DAIR Lab's ContactNets project (arxiv, github), which empirically demonstrated strong data efficiency in building a dynamics model (initially just geometry and friction) of an unknown object by observing its trajectory through contact-rich, impact-ridden interactions. The model could be learned accurately enough to offer strong predictive abilities. The performance was compared against an end-to-end learned model trained on prediction loss, which learned models of lower accuracy and exhibited dynamics predictions that violated physical realism (e.g., interpenetration of rigid objects).

My motivation with this paper was to explore what theory supports these strong empirical results. We did so through two lenses: 1) bounding the generalization error, and 2) relating losses to the physical quantity of "graph distance", or equivalently total least squares or errors in variables distance.

3 compared approaches

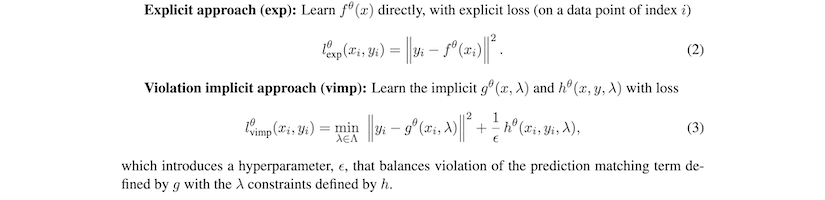

Our paper looks at three approaches, which we call explicit (exp), naive implicit (nimp), and violation implicit (vimp). Vimp is our approach, and features a loss function that allows for but penalizes violation of physics-based dynamics constraints.

Toy problem

Figure 1: A toy problem used throughout the paper of a point mass falling under gravity and colliding inelastically with the ground (left). The loss landscapes of the 3 studied approaches are depicted in the right two plots.

While our paper's analysis holds for general classes of equations, we remind readers that these classes are physically relevant by returning to a toy problem throughout the sections. The toy problem is 1-dimensional with perfect inelasticity, modeled in discrete time.

Generalization error

We combine prior works using Rademacher complexity and Dudley's entropy integral to bound the generalization error of our studied approaches. These generalization error bounds are in terms of Lipschitz constants of the three losses with respect to the learned parameters. Our paper provides closed-form expressions for these Lipschitz constants (equations 11-13).

Figure 2: The generalization error bounds as a function of dataset size for the 3 approaches (left) and as a function of failure probability for the vimp approach (right) for the toy problem.

Some take-aways from our analysis:

- When the set of learned parameters is shared across the three approaches, there is no difference between the exp and nimp approaches in terms of their generalization error bounds. Thus, any inherent stiffnesses in the explicit approach will be inherited by naive implicit approaches based on prediction loss.

- The vimp approach, in contrast, can avoid this pitfall because of essentially regularizing the stiffness.

Specifically on the paper's toy problem, these take-aways manifest as:

- The exp and nimp approaches have generalization error bounds that scale with the reciprocal of the time step. That is, the finer the resolution, and thus the higher the accuracy, of the discrete time simulator, the less we can say about how the exp and nimp approaches will perform on new data -- in fact, in the limit, we can say nothing since the bound blows up to infinity with a zero time step.

- The vimp approach, in contrast, provably generalizes with a bound that is independent of time step. With reasonable values for these parameters, we show that the vimp approach exhibits over an order of magnitude reduction in generalization error bound.

Graph distance

Figure 3: Illustration of graph distance versus prediction error.

We also include a section about how the three loss formulations relate to graph distance, or total least squares, or errors in variables distance. This quantity handles noise in inputs and outputs alike. For smooth functions, graph distance approaches prediction loss. However, these metrics can differ drastically around high stiffness regions.

We use quadratic growth arguments to prove that the vimp loss formulation can tightly bound graph distance, giving the vimp loss a physically meaningful property, and ensuring that our aims of minimizing generalization error and training loss are in pursuit of a quality model.

Presentation at L4DC 2022

I presented this work at the L4DC 2022 conference hosted by Stanford University, where we received quality feedback from other attendees. See the attached poster pdf (at the top of this post) for the visual I used during my paper poster session.

Figure 4: Me at the conference's diversity dinner (4th from left).

bianchini-love engineering

bianchini-love engineering