
Live ContactNets Demo with Franka Arm

May 2022 Bianchini

At the 2022 International Conference on Robotics and Automation (ICRA) in Philadelphia, we took advantage of the conference being in our neighborhood and performed live demonstrations of our ContactNets project. The demo featured a Franka Panda robotic arm tossing a test object onto a table and learning the object's geometry just by observing its contact-rich trajectory. My collaborators are fellow Penn PhD student Mathew Halm, Penn master's student Kausik Sivakumar, and Penn faculty member Michael Posa.

Video 1: A short video of the demonstration.

Overview

The demonstration consists of the Franka robot repeatedly tossing an unknown object, while an instance of ContactNets uses the toss trajectories to train a geometry mesh and a friction parameter. The object mesh is viewable from a browser window, with options to see the mesh continuously spinning or to interact with the mesh by panning around on the webpage.

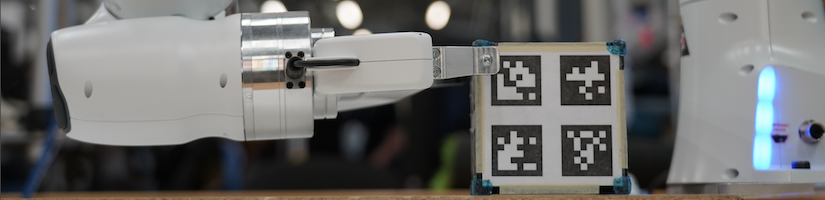

Figure 1: The Franka goes to pick up the object.

While more data yields a higher-quality model, we are particularly interested in the low-data regime. With our novel training setup (see our L4DC 2022 paper and my RSS 2022 presentation for more details), we see good convergence of the object mesh and friction parameter after around 10-20 tosses.

Setup

Physical setup

Figure 2: The physical setup of the demo.

Many of the necessary components for running the demo are pictured above. Note that while our example object is a cube, any convex shape would work as long as we configure TagSLAM to track the new object. Unpictured components include:

- the computers (noted in the network connections diagram),

- the Franka control box (noted in the network connections diagram), and

- the camera calibration board.

Figure 3: The network setup of the devices.

The network connections between the computers, robot arm components, and cameras are depicted in the diagram above. Note that internet access is not required to run this demo.

Computer 1

Computer 1 is otherwise known as the “Franka desktop” and does the following:

- Communicates with the Franka arm, which requires an operating system with a realtime kernel. We use the Franka ROS Interface package, which lets us access 1) high-level MoveIt! commands to pick up the object and set up for a toss while avoiding obstacles, and 2) low-level joint position and velocity commands for executing the more dynamic toss. Our tossing script tosses indefinitely until manually stopped, and pauses for human intervention if the object somehow ends up out of reach.

- Runs three black-and-white Point Grey cameras, which feed TagSLAM to localize the object in the scene online at low frequency (10 Hz).

- Records high-frequency camera frames (90 Hz) into a rosbag during every toss, then pauses the online TagSLAM to process those frames offline while the robot sets up for the next toss.

- Copies the high frequency object odometry recording over to Computer 2.
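The duties above can be pictured as one repeating cycle. The following is only a rough sketch of that control flow, not our actual tossing script: every callable in `TossCycle` is a hypothetical stand-in for the real robot, camera, and file-transfer code, and the offline processing runs sequentially here for simplicity, whereas in the demo it overlaps with the robot setting up for the next toss.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class TossCycle:
    """Hypothetical stand-ins for the real robot, camera, and transfer steps."""
    object_reachable: Callable[[], bool]    # e.g. checked from the 10 Hz online pose
    wait_for_human: Callable[[], None]      # pause until someone moves the object back
    pick_and_setup: Callable[[], None]      # high-level, obstacle-aware motion
    toss_and_record: Callable[[], str]      # dynamic toss + 90 Hz recording; returns a recording path
    process_offline: Callable[[str], str]   # offline tracking pass; returns an odometry path
    send_to_learner: Callable[[str], None]  # copy the odometry file to Computer 2


def run_tosses(cycle: TossCycle, should_stop: Callable[[], bool]) -> int:
    """Toss until should_stop() returns True; return the number of completed tosses."""
    completed = 0
    while not should_stop():
        if not cycle.object_reachable():
            # The object ended up out of reach: wait for a person, then re-check.
            cycle.wait_for_human()
            continue
        cycle.pick_and_setup()
        recording = cycle.toss_and_record()
        odometry = cycle.process_offline(recording)
        cycle.send_to_learner(odometry)
        completed += 1
    return completed
```

Passing the steps in as callables keeps the loop itself free of any robot-specific dependencies, so it can be exercised with simple stubs before touching hardware.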

Computer 2

Computer 2 takes care of everything beyond the data collection step:

- Monitors for new trajectories shared by Computer 1. When one arrives, it extracts the relevant portion of the toss trajectory (i.e., it cuts off any robot contact states at the beginning and trims excess resting time at the end) and saves it into the dataset folder.

- Runs an instance of the DAIR Physics Learning Library (PLL), which re-reads the dataset folder at every epoch to refresh its dataset, and trains a geometry mesh and friction parameter for the object.

- Renders the object mesh in real time as the PLL model updates, allowing viewers to pan around the object for inspection.
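To make the trajectory-trimming step concrete, here is a minimal sketch of one way it could be done. It is an illustration under stated assumptions rather than the code we ran: the function name `trim_toss`, the known `release_time`, and the speed threshold are all hypothetical, and the sketch looks only at the object's position, ignoring its orientation.

```python
import math
from typing import List, Tuple

# One sample: (time in seconds, object position in meters).
Sample = Tuple[float, Tuple[float, float, float]]


def trim_toss(
    samples: List[Sample],
    release_time: float,         # assumed known: when the robot lets go of the object
    rest_speed: float = 0.01,    # assumed threshold (m/s) below which the object counts as at rest
    rest_padding: float = 0.1,   # seconds of rest to keep after the last motion
) -> List[Sample]:
    """Drop samples before release and excess resting time at the end."""
    # Cut off the portion where the robot is still in contact with the object.
    free = [s for s in samples if s[0] >= release_time]
    if len(free) < 2:
        return free

    # Find the last sample at which the object was still moving,
    # using finite-difference speed between consecutive samples.
    last_moving = 0
    for i in range(1, len(free)):
        (t0, p0), (t1, p1) = free[i - 1], free[i]
        dt = t1 - t0
        if dt <= 0:
            continue  # skip duplicated or out-of-order timestamps
        if math.dist(p0, p1) / dt > rest_speed:
            last_moving = i

    # Keep a short window of rest after the motion ends, and discard the remainder.
    end_time = free[last_moving][0] + rest_padding
    return [s for s in free if s[0] <= end_time]
```

Keeping a little resting time at the end lets the trimmed trajectory still show the object settling, while avoiding a long tail of identical states in the dataset.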

bianchini-love engineering
